AWS Lambda Process S3 Objects

Processing S3 Objects

This is a continuation of the Using Lambda to Download to S3 post. See the previous post for relevant IAM configuration.

This post walks through how to take action on a file once it is uploaded to your S3 bucket.

Setting up Lambda to trigger on S3 Event

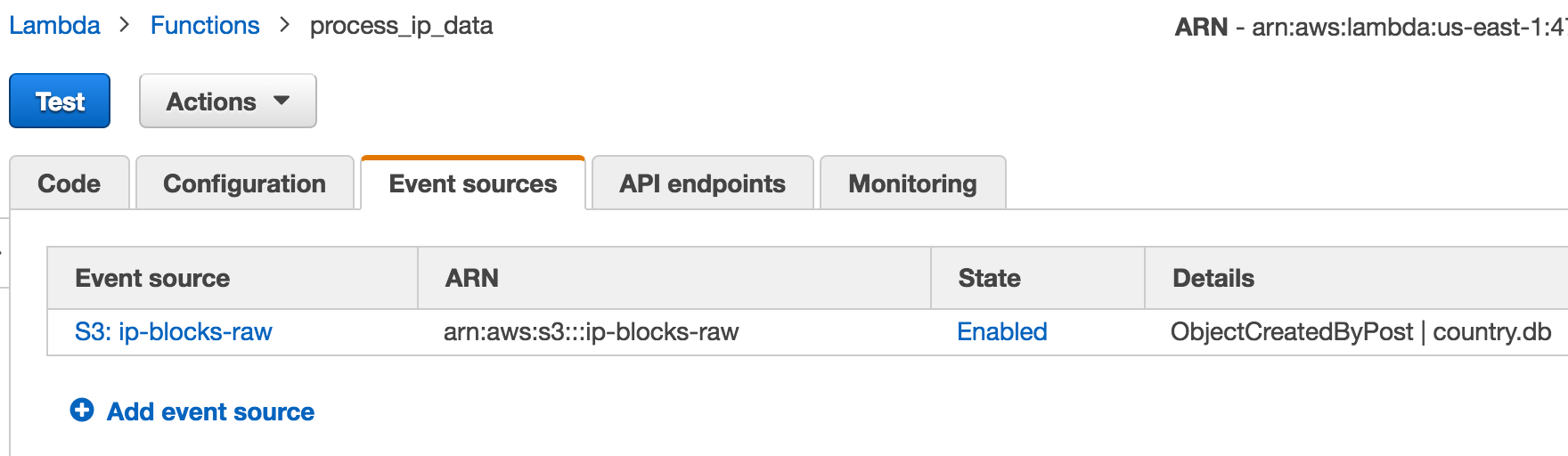

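Should you trigger on ObjectCreatedByPost or ObjectCreatedByPut? That depends on how the object reaches S3. boto3's put_object issues an HTTP PUT, so it raises the Put event. upload_file also uses a PUT for small files but switches to a multipart upload for large ones, which raises the CompleteMultipartUpload event instead. The Post event is for browser-style form uploads. If you are not sure which one applies, subscribing to all ObjectCreated events covers every case. Once your event source is configured, it should look something like this: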

By clicking on the state, you can toggle whether it’s on or off.
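
If you would rather set up the trigger from code than from the console, the same event source can be sketched with boto3. This is only a sketch under assumptions, not the setup used in this post: the function ARN and bucket name are placeholders, build_notification_config and apply_notification_config are hypothetical helper names, and the Lambda function is assumed to already allow S3 to invoke it.

```python
def build_notification_config(function_arn, events=("s3:ObjectCreated:*",), suffix=None):
    """Build the dict that put_bucket_notification_configuration expects."""
    config = {
        "LambdaFunctionArn": function_arn,
        "Events": list(events),
    }
    if suffix:
        # Optional filter so only keys ending in `suffix` invoke the function.
        config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"LambdaFunctionConfigurations": [config]}


def apply_notification_config(bucket_name, notification_config):
    """Send the configuration to S3 (needs AWS credentials to run)."""
    import boto3  # imported here so the builder above works without boto3

    s3 = boto3.client("s3")
    # Note: this call replaces the bucket's entire notification configuration.
    s3.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=notification_config,
    )
```

Subscribing to s3:ObjectCreated:* means the function fires no matter which upload method created the object.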

Testing Lambda S3 Event

It wasn't obvious to me how the S3 event data would be passed to the Lambda function, and I couldn't easily find it documented. AWS does provide an example Put event, which I'm including here for when you want to test your function. Part of the reason I'm posting it is so I can refer to it without digging through the function's test configuration.

    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "requestParameters": {
            "sourceIPAddress": "127.0.0.1"
          },
          "s3": {
            "configurationId": "testConfigRule",
            "object": {
              "eTag": "0123456789abcdef0123456789abcdef",
              "key": "HappyFace.jpg",
              "sequencer": "0A1B2C3D4E5F678901",
              "size": 1024
            },
            "bucket": {
              "ownerIdentity": {
                "principalId": "EXAMPLE"
              },
              "name": "mybucket",
              "arn": "arn:aws:s3:::mybucket"
            },
            "s3SchemaVersion": "1.0"
          },
          "responseElements": {
            "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH",
            "x-amz-request-id": "EXAMPLE123456789"
          },
          "awsRegion": "us-east-1",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "EXAMPLE"
          },
          "eventSource": "aws:s3"
        }
      ]
    }

Edit this sample data to match your own bucket and object names.
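
To avoid hand-editing the JSON every time, a small helper can generate a trimmed-down test event. This is a minimal sketch, assuming your handler only reads the bucket name and object key; make_s3_put_event is a hypothetical helper, not part of boto3 or the Lambda runtime.

```python
import json


def make_s3_put_event(bucket, key, size=1024):
    """Return a minimal S3 'ObjectCreated:Put' event for testing a handler."""
    return {
        "Records": [
            {
                "eventVersion": "2.0",
                "eventSource": "aws:s3",
                "awsRegion": "us-east-1",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "s3SchemaVersion": "1.0",
                    "bucket": {
                        "name": bucket,
                        "arn": "arn:aws:s3:::%s" % bucket,
                    },
                    "object": {"key": key, "size": size},
                },
            }
        ]
    }


# JSON text that can be pasted into the function's test configuration.
sample_event_json = json.dumps(
    make_s3_put_event("mybucket", "HappyFace.jpg"), indent=2
)
```

The fields left out here (eTag, sequencer, responseElements and so on) are present in real events, so add them back if your function depends on them.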

Processing IP information

Here is the code that parses the IP information we collected in the previous post and generates flat text files containing object groups that can be used for setting up rules and policies on Cisco ASA appliances:

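    from __future__ import print_function

    import os

    import boto3

    print('Loading function')

    s3 = boto3.resource('s3')


    def lambda_handler(event, context):
        # Take the bucket name and object key from the event, not from constants.
        bucket = event['Records'][0]['s3']['bucket']['name']
        object_key = event['Records'][0]['s3']['object']['key']

        # /tmp is the writable path in Lambda; use the base name so a key with
        # a prefix ("dir/file") does not point at a directory that is missing.
        file_name = os.path.join("/tmp", os.path.basename(object_key))
        s3.Bucket(bucket).download_file(object_key, file_name)

        # Group the networks by country code: {cc: [{"net": ..., "mask": ...}]}
        d = {}
        with open(file_name) as f:
            for line in f:
                (net, mask, cc) = line.split()
                d.setdefault(cc, []).append({"net": net, "mask": mask})

        # Write one object-group file per country code back to the bucket.
        # Iterating the dict directly works on Python 2 and 3 (iterkeys is 2-only).
        for key in d:
            s3_name = "%s.txt" % (key)
            file_content = generate_asa_object_groups(key, d[key])
            s3.Bucket(bucket).put_object(Key=s3_name,
                                         Body=file_content,
                                         ContentType="text/plain")


    def generate_asa_object_groups(cc, networks):
        # Build the ASA "object-group network" stanza for one country code.
        obj_group = "object-group network %s-ips" % (cc)
        for network in networks:
            obj_group += "\nnetwork-object %s %s" % (network["net"],
                                                     network["mask"])
        return obj_group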

If you’re using a different network appliance, you could replace the generate_asa_object_groups function with a function that would generate the proper syntax for your device.
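
As an illustration of such a replacement, here is a sketch of a generator that emits a plain list of CIDR blocks instead of ASA object groups. It is not tied to any particular vendor's syntax. generate_cidr_list is a hypothetical name, and the sketch assumes Python 3 and that each mask is a dotted netmask such as 255.255.255.0, as in the ASA output.

```python
import ipaddress


def generate_cidr_list(cc, networks):
    """Return a comment header plus one CIDR block per line for a country code."""
    lines = ["# %s networks" % cc]
    for network in networks:
        # ip_network accepts "address/netmask" and normalizes it to CIDR form.
        # It raises ValueError if the address has host bits set.
        cidr = ipaddress.ip_network("%s/%s" % (network["net"], network["mask"]))
        lines.append(str(cidr))
    return "\n".join(lines)


example = generate_cidr_list(
    "US", [{"net": "192.0.2.0", "mask": "255.255.255.0"}]
)
# example == "# US networks\n192.0.2.0/24"
```

Because it takes the same (cc, networks) arguments, it can be swapped in for generate_asa_object_groups without touching the handler.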

Two important things to note in the code:

- We do not statically define any values in this script; the bucket name and object key both come from the event.

- We add ContentType="text/plain" to the put_object call so that browsers display the file served from S3 instead of downloading it.
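
The first point can be taken a step further. An event may carry more than one record, and S3 URL-encodes object keys in the event (a space arrives as "+"). The sketch below assumes a Python 3 runtime and shows one way to read every record and decode its key; iter_s3_objects is a hypothetical helper name.

```python
from urllib.parse import unquote_plus


def iter_s3_objects(event):
    """Yield (bucket, key) for every record in an S3 event."""
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Decode the URL-encoded key before passing it to download_file.
        key = unquote_plus(s3_info["object"]["key"])
        yield bucket, key
```

Inside a handler this would be used as `for bucket, key in iter_s3_objects(event):` in place of indexing Records[0] directly.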

If you want to serve these files as static content from an S3 bucket, you can enable static website hosting and then add a bucket policy like the following:

    {
      "Statement": [
        {
          "Action": "s3:GetObject",
          "Effect": "Allow",
          "Principal": "*",
          "Resource": "arn:aws:s3:::ip-blocks-raw/*",
          "Sid": "PublicReadGetObject"
        }
      ],
      "Version": "2012-10-17"
    }
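
The same policy can also be attached from code. The following is a sketch rather than the method used for this post: build_public_read_policy and apply_public_read_policy are hypothetical helper names, and the apply step assumes boto3 is installed and your credentials are allowed to change the bucket policy.

```python
import json


def build_public_read_policy(bucket_name):
    """Return a policy dict granting public s3:GetObject on every object."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::%s/*" % bucket_name,
            }
        ],
    }


def apply_public_read_policy(bucket_name):
    """Attach the policy to the bucket (needs AWS credentials to run)."""
    import boto3  # imported here so the builder above works without boto3

    s3 = boto3.client("s3")
    # put_bucket_policy expects the policy as a JSON string, not a dict.
    s3.put_bucket_policy(
        Bucket=bucket_name,
        Policy=json.dumps(build_public_read_policy(bucket_name)),
    )
```

Building the policy from the bucket name keeps the Resource ARN in step with whichever bucket you point it at.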

If you want to see an example of how you might display the results, check out my country-obj-grp page. The front page is static, and everything it links to is updated by the AWS Lambda jobs.